What is artificial intelligence?

There was an exchange on Twitter a while back where someone said, ‘What is artificial intelligence?’ And someone else said, ‘A poor choice of words in 1954’.

— Ted Chiang, “The machines we have now are not conscious”, Financial Times

Artificial intelligence (AI) is formally defined as the branch of computer science focused on systems capable of performing tasks that typically require human intelligence, such as reasoning, decision-making, and problem-solving. Unfortunately, defining a technology by the types of problems it solves is vague and not particularly helpful. For instance:

- If you build a sophisticated spreadsheet model that analyzes data and generates a prediction, have you created AI?

- If a grammar checker claims to use AI but is built with traditional programming techniques rather than modern AI approaches, should you consider it artificial intelligence?

In one sense, the label “AI” doesn’t matter. If a tool solves a problem, who cares what technical architecture it was built on? On the other hand, creating a strategy to turn a groundbreaking new technology into measurable business outcomes requires understanding the basics of how the technology works to help you decipher both what it is good at and how to use it more effectively.

Beyond being vague, the term “artificial intelligence” is problematic because it reinforces the myth that AI systems understand what they’re doing. Even today’s advanced architectures do statistical calculations on data, not reasoning as a human would. To understand the distinction between computation and comprehension, consider John Searle’s famous 1980 thought experiment, the “Chinese Room Argument”:

Searle imagines himself alone in a room following a computer program [rules] for responding to Chinese characters slipped under the door. Searle understands nothing of Chinese, and yet, by following the program for manipulating symbols and numerals just as a computer does, he sends appropriate strings of Chinese characters back out under the door, and this leads those outside to mistakenly suppose there is a Chinese speaker in the room.

— “The Chinese Room Argument”, Stanford Encyclopedia of Philosophy

Searle makes language processing sound disarmingly simple: just follow the rules. But language in the wild is complex, context-sensitive, ambiguous, and ever-changing. In short, it defies rules. Processing language has always been one of the biggest challenges in computer science. That’s why language processing has become a benchmark for AI capability. And why recent breakthroughs in generative AI have gained so much attention.

The modern AI paradigm for language processing, Large Language Models (LLMs), yields far better results than previous approaches. However, LLMs still only mimic human reasoning by using probability to iteratively predict the next word in a string of text based on a complex analysis of all previous words.
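The idea of iteratively predicting the next word can be sketched with a deliberately tiny model. The example below is an illustration only: it counts which word follows which in a made-up three-sentence corpus and always picks the most frequent follower. Real LLMs look at all previous words rather than just the last one, and the names `train_bigram` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts: dict, start: str, length: int) -> str:
    """Repeatedly append the most frequent follower of the last word."""
    words = [start]
    for _ in range(length):
        followers = counts.get(words[-1])
        if not followers:
            break  # the model has never seen anything after this word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Invented toy corpus; a real model is trained on vastly more text.
corpus = "the cat sat on the mat . the cat sat on the rug . the dog ate the fish ."
model = train_bigram(corpus)

print(generate(model, "the", 4))  # the cat sat on the
```

Even this toy shows the core limitation: the model has no notion of cats or rugs, only of which words tend to follow which.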

Because AI has no way to check its prediction against real-world experience, its pattern-matching algorithm can occasionally produce errors (commonly referred to as hallucinations). Some errors are so obvious that even an elementary school student would immediately recognize them. We laugh at these. Other errors can sound surprisingly convincing, even to those with domain expertise. LLMs have even been known to make up quotes, citations, or other evidence to support their erroneous conclusions. That’s why using LLMs without understanding and mitigating the risks inherent in how they work can be catastrophic.

Key AI concepts

Before we look more specifically at how various AI architectures work, it’s important to grasp a few core AI concepts. In addition to making the difference in AI architectures easier to understand, you will see these concepts pop up frequently in AI articles and discussions. Understanding them will help your AI knowledge progress faster.

Tokens

Modern AI architectures break their training data into small units called tokens, each of which is mapped to a unique number. For language processing, a token can be a whole word, a part of a word, or even a symbol such as punctuation. For example, the word “tokenization” might be split into tokens like “token” and “ization”. Each model has a fixed vocabulary of these tokens that it can recognize and work with.

In simple explanations of AI language models, it’s common to substitute “word” for “token” because it’s intuitively easier to understand. I use this approach. Just remember that LLMs aren’t actually predicting the next word; they are predicting the next token.
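To make the idea concrete, here is a minimal sketch of tokenization using a greedy longest-match rule. The six-entry vocabulary is invented for illustration (real models learn vocabularies with tens of thousands of entries and use more sophisticated splitting schemes), and the function name `tokenize` is hypothetical.

```python
# Toy vocabulary: every token maps to a unique integer ID.
# These entries are invented for illustration, not taken from any real model.
VOCAB = {"token": 0, "ization": 1, "the": 2, "cat": 3, " ": 4, ".": 5}


def tokenize(text: str) -> list[int]:
    """Greedily match the longest vocabulary entry at each position."""
    ids = []
    pos = 0
    while pos < len(text):
        # Try the longest possible piece first, then shrink it.
        for end in range(len(text), pos, -1):
            piece = text[pos:end]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                pos = end
                break
        else:
            raise ValueError(f"no token covers {text[pos:]!r}")
    return ids


print(tokenize("tokenization"))  # [0, 1]
print(tokenize("the cat."))      # [2, 4, 3, 5]
```

Notice that the model never sees letters or words, only the sequence of numbers.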

Tokens aren’t limited to language processing. Other media can also be broken down into tokens for processing:

- Images: Patches of pixels

- Audio: Sound segments

- Video: Spatio-temporal segments

Feature engineering

In AI, features are specific, measurable characteristics of data that help make predictions or classifications. For example, in a house price prediction system, features might include square footage, location, and number of bedrooms. Feature engineering is the process of identifying these useful characteristics.

In early AI systems, humans had to manually identify and create these features. For image recognition, this meant explicitly telling the system to look for specific aspects like edges, colors, or shapes. Human feature engineering was time-consuming, required domain expertise, and was limited by human intuition about what features matter.
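As a rough sketch of what manual feature engineering looks like in practice, the example below turns a raw house record into a list of numbers that a human decided were useful. The `House` fields, the `PREMIUM_ZIPS` set, and the derived features are all assumptions made up for this illustration.

```python
from dataclasses import dataclass


@dataclass
class House:
    square_feet: float
    bedrooms: int
    year_built: int
    zip_code: str


# Hypothetical list of desirable ZIP codes chosen by a human expert.
PREMIUM_ZIPS = {"94105", "10013"}


def engineer_features(house: House, current_year: int = 2024) -> list[float]:
    """Turn a raw record into the numeric features a human chose."""
    return [
        house.square_feet,
        float(house.bedrooms),
        float(current_year - house.year_built),      # age of the house
        house.square_feet / max(house.bedrooms, 1),  # space per bedroom
        1.0 if house.zip_code in PREMIUM_ZIPS else 0.0,
    ]


print(engineer_features(House(1800.0, 3, 1994, "94105")))
# [1800.0, 3.0, 30.0, 600.0, 1.0]
```

Every line of `engineer_features` encodes a human guess about what matters, which is exactly why the approach was slow and limited by intuition.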

One of the most important breakthroughs of modern deep learning is its ability to automatically discover important features on its own. When shown millions of cat photos, a deep learning system builds up its understanding in layers: early layers might detect basic features like edges and textures, middle layers might combine these into patterns like whiskers or fur, and deeper layers might recognize complex concepts like ‘cat sleeping’ or ‘cat playing.’ Automatic feature engineering allows the system to discover subtle patterns that humans might not have thought to specify.

Modern AI often combines automated feature learning with human-engineered features in areas where domain expertise adds value.

Structured vs unstructured data

Early AI systems could only work with structured data, meaning information that was carefully organized, cleaned, and labeled by humans. Imagine being handed a filing cabinet where every folder is perfectly labeled and organized versus a messy desk covered in random papers. Early AI could only handle the filing cabinet.

Today’s AI systems, particularly deep learning models, can work with unstructured data like natural language, images, or audio in their raw form. This is revolutionary because most of the world’s information exists in unstructured forms. However, there’s a trade-off. While modern AI can handle messier data, it needs massive amounts of it to learn effectively—think millions of examples rather than hundreds. This helps explain why companies with access to large amounts of data (like Google, Meta, or Amazon) have been at the forefront of AI development.

Prediction transparency

The evolution of AI represents a trade-off between power and transparency. With early AI, you could see how decisions were made because the system followed clear, human-written rules. Modern deep learning systems are more like black boxes. They make better decisions, but it’s much harder to understand exactly how they arrive at those decisions.

This trade-off has important implications for business use of AI. An AI system that can accurately predict which customers will churn—but can’t tell you why—might have value for revenue forecasting. However, it is of limited value if the goal is to find ways to improve customer retention. Sometimes organizations need to choose between a more powerful but opaque AI system and a simpler but more transparent one.

Deterministic vs probabilistic output

Computer technologies are generally deterministic. Like a calculator, they always give the same output for the same input. But generative AI is different.

Generative AI works by predicting what comes next based on patterns in its training data. For each decision (like choosing the next word), the model assigns probabilities to many possible options. For example, after “The cat sat on the…”, the model might think “mat” has a 30% chance of being next, “chair” has 20%, and so on.

Rather than always choosing the highest probability option, models use controlled randomness in their selections. This helps them generate more natural and varied responses while avoiding getting stuck in repetitive patterns or becoming too rigid in their outputs. But getting a different answer each time when using the same prompt makes some AI use cases quite challenging.

Programmers who create AI apps by calling a model’s API can directly control how variable the response is. Conservative settings heavily favor high-probability choices and produce more consistent, focused results. Higher variability settings result in the probability distribution being flattened, allowing lower probability options to be selected more frequently. This produces more diverse and creative outputs, but with potentially less precision.
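The effect of a variability setting can be sketched in a few lines. Many model APIs expose this control under the name "temperature"; the rescaling below (raise each probability to the power 1/temperature, then renormalize) is one common way to describe it, not the implementation of any particular vendor. The probabilities for “mat” and “chair” come from the example above, while the remaining words and values are invented so that the distribution sums to 1.

```python
import math
import random


def apply_temperature(probs: dict[str, float], temperature: float) -> dict[str, float]:
    """Rescale probabilities: low values sharpen, high values flatten."""
    scaled = {w: math.exp(math.log(p) / temperature) for w, p in probs.items()}
    total = sum(scaled.values())
    return {w: s / total for w, s in scaled.items()}


def sample_next_word(probs: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick one word at random, weighted by the adjusted probabilities."""
    adjusted = apply_temperature(probs, temperature)
    words = list(adjusted)
    return rng.choices(words, weights=[adjusted[w] for w in words], k=1)[0]


# Illustrative probabilities for the word after "The cat sat on the..."
next_word = {"mat": 0.30, "chair": 0.20, "sofa": 0.15,
             "floor": 0.15, "roof": 0.10, "moon": 0.10}

for t in (0.2, 1.0, 2.0):
    print(t, round(apply_temperature(next_word, t)["mat"], 2))
# 0.2 0.83   conservative: "mat" dominates
# 1.0 0.3    unchanged
# 2.0 0.23   flattened: unlikely words get picked more often

rng = random.Random(42)  # fixed seed so the sketch is repeatable
print([sample_next_word(next_word, 2.0, rng) for _ in range(5)])
```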

You can’t explicitly set the variability of a model from a chatbot interface. However, you can use prompting techniques to tell the model how conservatively or creatively you want it to respond. Some chatbots, such as Microsoft’s Copilot, have a separate “creative mode” designed to produce more varied output.

How AI works

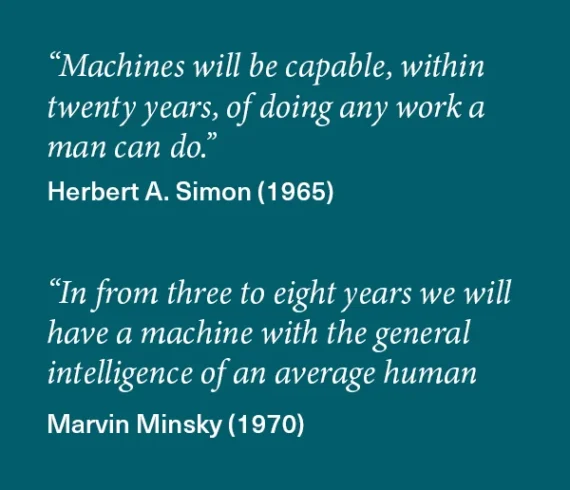

AI has suddenly sprung into mainstream conversations. But it isn’t new. The term artificial intelligence was coined in the 1950s. And there was prior work that laid the foundations, even if AI hadn’t been named yet. That means researchers have been working on AI for at least 75 years.

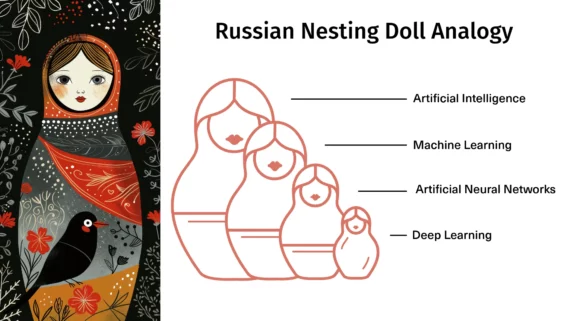

AI architectures can be described using the analogy of a Russian nesting doll, with the broad term “artificial intelligence” being the outermost doll. Each architecture is a subset of the architectures above it. Therefore, all the terms shown accurately describe today’s generative AI. However, “deep learning” is the most specific and the most frequently used.

As you move from the broad term artificial intelligence to deep learning, you are essentially tracing the evolution of AI. However, earlier architectures are not obsolete. Because they are simpler, less costly to create and deploy, and have unique abilities, they are still used for appropriate use cases. They’re just not particularly good at natural language processing.

Artificial intelligence

Artificial Intelligence (AI) is an umbrella term that encompasses AI approaches from its earliest days to the present. However, since we associate contemporary AI with machine learning and the architectures that evolved from it, such as deep learning, this outermost layer of the AI doll is also a good place to describe the AI architectures that came before machine learning.

The language Searle uses in his Chinese Room Argument—a man following rules—provides a clue about the AI architecture of his era. From the 1950s to the 1980s, most AI consisted of a series of rules created with traditional programming techniques. These systems are referred to formally as symbolic AI. You may occasionally hear them informally called “Good Old Fashioned AI” (GOFAI).

How symbolic AI works

Symbolic AI was built on knowledge representation plus a series of if-then statements and decision trees. While early AI developers may have had loftier aspirations for what was possible with computers, the architecture they used wasn’t much different from that of other software of the day.

Limitations of symbolic AI

Symbolic AI was rigid and brittle—it could only handle the specific scenarios it was programmed for. Any deviation from the expected input would cause failures. Improving outputs required rewriting code, a time-consuming and error-prone process.
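A minimal sketch of the rule-based approach, loosely in the spirit of early chatbots such as ELIZA, shows both how it works and why it is brittle. The patterns and replies below are invented for illustration and are not taken from any historical system.

```python
import re

# Hand-written rules: (pattern to look for, reply template).
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
    (re.compile(r"\b(hello|hi)\b", re.IGNORECASE), "Hello. What is on your mind?"),
]

FALLBACK = "Please go on."


def respond(message: str) -> str:
    """Return the reply of the first rule whose pattern matches."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            return template.format(*match.groups())
    return FALLBACK


print(respond("I feel anxious"))       # Why do you feel anxious?
print(respond("I'm feeling anxious"))  # Please go on.
```

The second message means nearly the same thing as the first, but because no rule anticipates that exact wording, the system falls through to a generic reply. Handling it would require a programmer to write yet another rule.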

Common use cases for symbolic AI

Applications of symbolic AI include:

- Expert systems for medical diagnosis

- Early chatbots like ELIZA

- Basic decision support systems

For natural language processing, these systems could perform simple tasks such as rule-based grammar checking and simple keyword matching. But they could not ascertain context or meaning.

Symbolic AI is still used for simple tasks where deterministic output is desirable. It can be quite useful when combined with more modern AI approaches. But in terms of the evolution of AI, it has no direct descendants.

Machine Learning

Machine Learning revolutionized AI because it makes predictions based on patterns in data rather than hand-coded rules. In addition to predictions, machine learning can perform tasks such as clustering data that is alike or identifying anomalies in data.

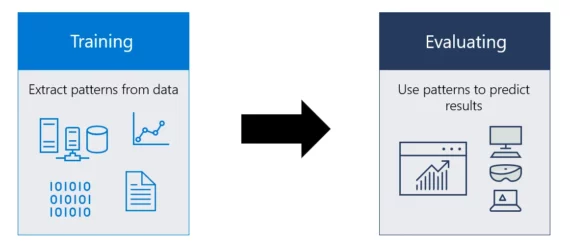

How machine learning works

Making predictions with machine learning happens in two stages. First, during an iterative training process, machine learning algorithms analyze a dataset to identify associations and patterns in the data. These patterns (not the data itself) are stored as numerical parameters in a model. Then, the model is used to make predictions on new data that wasn’t in the original dataset. The process of generating predictions from new data is called inference.

Image source: Microsoft, “What is a machine learning model?”
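The two stages, training and inference, can be sketched with one of the simplest possible models: a straight line fitted by gradient descent. The data is invented (house size in thousands of square feet, price in thousands of dollars), and the function names `train` and `predict` are hypothetical. The point is that the finished model consists of just two numbers, `w` and `b`, and not the training data itself.

```python
def train(xs, ys, learning_rate=0.05, epochs=5000):
    """Stage 1: iteratively fit y = w * x + b by gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b  # the learned parameters are the model


def predict(model, x):
    """Stage 2 (inference): apply the learned parameters to new data."""
    w, b = model
    return w * x + b


# Invented training data that follows price = 200 * size + 50.
sizes = [1.0, 1.5, 2.0, 2.5, 3.0]
prices = [250.0, 350.0, 450.0, 550.0, 650.0]

model = train(sizes, prices)
print(round(model[0], 2), round(model[1], 2))  # 200.0 50.0
print(round(predict(model, 2.2), 1))           # 490.0, a size not in the training data
```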

Advantages of machine learning

Although early machine learning couldn’t do automatic feature extraction, it did provide the ability to deal with patterns that are difficult to express as explicit rules. As a result, it was better at generalizing on new, unseen data. It was also far more flexible than symbolic AI because it could improve and adapt to new environments with additional training.

Compared to earlier rules-based AI, early machine learning was better at:

- Dealing with uncertainty

- Learning from examples without explicit programming

- Processing numerical data

Limitations of machine learning

Although the ability to learn and extrapolate from data was groundbreaking, early incarnations of machine learning still had significant weaknesses:

- Required careful human feature engineering

- Had limited ability to handle complex patterns

- Needed clean, structured data

Basic machine learning was not very good for natural language processing or other data with multi-dimensional or non-linear relationships. Nor did it work well with large datasets. However, the paradigm shift from human-created rules to learned pattern matching set a new trajectory for future AI systems that would eventually revolutionize AI capability.

Common use cases for machine learning

Machine learning algorithms greatly expanded the range of tasks that computers could be used for. Common applications for basic machine learning include:

- Fraud detection

- Customer segmentation

- Basic recommendation systems

Neural Networks

Neural networks are a type of machine learning inspired by the structure of the human brain. Like all machine learning, neural networks use a trained model to make predictions from patterns in data. However, under the hood, neural networks operate in a fundamentally different way.

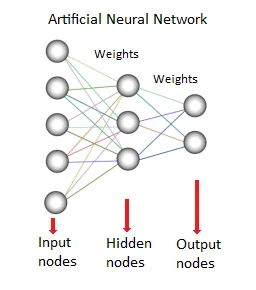

How neural networks work

An artificial neural network processes information through layers of interconnected artificial neurons (also called nodes or perceptrons). Each layer performs specific transformations on the data. For example, in a text classification system, the input layer might receive encoded words from a customer review, hidden layers process and transform this data, and the output layer produces probabilities for different categories (‘positive’: 80%, ‘negative’: 20%).

Image source: “Artificial Neural Network”, www.saedsayad.com

Artificial neurons aren’t exactly like biological neurons. However, they have two important properties that are analogous:

Activation: Each artificial neuron either fires or stays quiet based on its built-in activation threshold and the strength of the signals it receives.

Connection Strength: Connections between neurons aren’t binary. Neurons can have strong or weak influences on each other. The network learns by adjusting the connection weights between neurons.
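The flow from input layer to hidden layer to output probabilities can be sketched as follows. This is an illustration under stated assumptions: the weights are hand-picked rather than learned, the two inputs stand for hypothetical counts of positive and negative words in a review, and a smooth sigmoid function stands in for the fire-or-stay-quiet threshold described above.

```python
import math


def sigmoid(x: float) -> float:
    """Smooth activation: near 0 for weak signals, near 1 for strong ones."""
    return 1.0 / (1.0 + math.exp(-x))


def weighted_sums(inputs, weights, biases):
    """Each neuron adds up its inputs, scaled by connection strength."""
    return [sum(w * x for w, x in zip(neuron_w, inputs)) + b
            for neuron_w, b in zip(weights, biases)]


def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hand-picked connection weights; a real network learns these in training.
W_HIDDEN = [[2.0, -2.0], [-2.0, 2.0]]
B_HIDDEN = [0.0, 0.0]
W_OUT = [[3.0, -3.0], [-3.0, 3.0]]
B_OUT = [0.0, 0.0]


def classify(inputs):
    hidden = [sigmoid(s) for s in weighted_sums(inputs, W_HIDDEN, B_HIDDEN)]
    probs = softmax(weighted_sums(hidden, W_OUT, B_OUT))
    return {"positive": probs[0], "negative": probs[1]}


# Inputs: [count of positive words, count of negative words] (an assumption).
print(classify([1.0, 0.0]))  # strongly favors "positive"
print(classify([0.0, 1.0]))  # strongly favors "negative"
```

Training a network means nudging the numbers in `W_HIDDEN` and `W_OUT` until the outputs match known examples.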

Early neural networks, now called shallow neural networks, typically had only one or two hidden layers. While groundbreaking for their time, they were limited in their ability to learn complex patterns. Modern deep learning networks, by contrast, might have dozens of layers, allowing them to build up a much more sophisticated understanding of patterns in data.

Advantages of neural networks

Even early neural networks provided important capabilities:

- Modeling patterns that don’t follow simple straight-line relationships

- Processing multiple inputs simultaneously through parallel computation

- Learning useful features from data automatically

Limitations of neural networks

However, early neural networks still faced significant constraints:

- Could only learn relatively simple relationships

- Needed significant processing power even for basic tasks

- Tended to memorize training data rather than learning general patterns (overfitting)

- Could be unstable during learning and sensitive to initial settings

Common use cases

Shallow neural networks were commonly used in applications such as:

- Optical character recognition

- Simple speech recognition

- Basic image classification and sentiment analysis

While shallow neural networks are still useful for simple tasks or resource-constrained environments, most modern AI applications use deep learning architectures that overcome the limitations of shallow neural networks by adding additional layers of neurons along with improved algorithms.

Deep Learning

Deep learning, the architecture that gave rise to generative AI, is a larger, more complex version of an artificial neural network. Instead of a few hidden layers, it can have dozens. More layers of neurons combined with increasingly sophisticated algorithms allow deep learning networks to deal with the complexity and nuance of language, images, audio, and video.

How deep learning works

In the first era of deep learning, individual architectures evolved to target specific types of data. For example, Recurrent Neural Networks (RNNs) were the primary architecture for natural language applications and other sequential data.

RNNs process text one element at a time and in only one direction. That means errors that occur near the beginning of processing continue to propagate through the results, compounding as the analysis progresses. The linear process is also slow, which places a practical limit on the size of training datasets. Nor are RNNs good at capturing dependencies between words that are far apart in the text.

The transformer + self-attention revolution

The deep learning advance that enabled generative AI as we know it came in 2017 when Google AI researchers published a groundbreaking research paper entitled Attention is All You Need. This paper introduced the world to transformers and self-attention—the second generation of deep learning.

Transformers are a specific deep learning architecture designed primarily for sequence-to-sequence tasks such as natural language processing. Transformers allow much more efficient parallel processing than earlier approaches, increasing processing speed by an order of magnitude and making it practical to work with huge datasets.

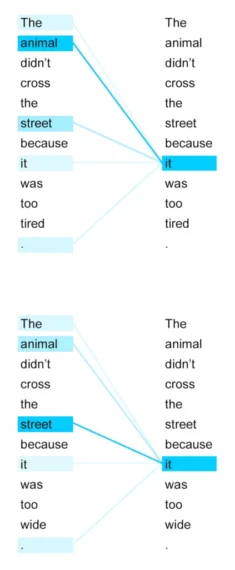

Self-attention is a mechanism inside transformers that allows the model to calculate the relative importance of words in a sequence and to disambiguate meaning by connecting words that go together, even when the words do not appear near each other. It does this by allowing each word in a text sequence to “look at” every other word when determining context.

The diagram below illustrates how self-attention works by comparing two similar sentences:

- The animal didn’t cross the street because it was too tired.

- The animal didn’t cross the street because it was too wide.

The blue lines and highlighting show how the word “it” in each sentence connects back to what it’s referring to. In the first sentence, “it” connects strongly to “animal” because tiredness is a property of an animal. In the second sentence, “it” connects strongly to “street” because the width is a property of a street.

Image source: “A Comprehensive Guide to ‘Attention Is All You Need’ in Transformers”, deepai.tn

Unlike first-generation deep learning, transformer-based deep learning isn’t limited to analyzing text in only one direction. As a result, it reduces the problem of cascading errors and makes it easier to connect words that are far apart.
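The “look at every other word” step can be sketched as a simplified form of scaled dot-product attention. This is a sketch under loud assumptions: the two-number word vectors are hand-picked so that “it” resembles “animal”, and the vectors are used directly as queries, keys, and values. A real transformer learns its vectors and applies separate learned projections for each role.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Return (outputs, weights) for a simplified single attention head."""
    dim = len(vectors[0])
    all_weights, outputs = [], []
    for query in vectors:
        # Score this word against every word in the sequence, itself included.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # The new representation is a weighted blend of all word vectors.
        blended = [sum(w * vec[i] for w, vec in zip(weights, vectors))
                   for i in range(dim)]
        all_weights.append(weights)
        outputs.append(blended)
    return outputs, all_weights


# Hand-picked toy vectors (assumptions, not learned values).
words = ["animal", "street", "it"]
vectors = [[2.0, 0.0], [0.0, 2.0], [2.0, 0.5]]

outputs, weights = self_attention(vectors)
it_weights = dict(zip(words, weights[words.index("it")]))
print({w: round(a, 2) for w, a in it_weights.items()})
# "it" places far more weight on "animal" than on "street"
```

Because every word is scored against every other word independently, all of these calculations can run in parallel, which is the source of the speed advantage over RNNs.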

You can imagine RNNs as assembly line workers, each passing information to the next in a fixed sequence. Transformers, on the other hand, are like a team of experienced investigators, all examining a criminal case simultaneously. Each investigator (attention head) can focus on different aspects of the evidence, looking for patterns and connections across the entire case. Investigators can easily relate clues from the beginning of the file to those at the end. Working together allows the investigators to form a comprehensive understanding of the case. And to solve it much more quickly.

The magic of large datasets

It turns out that attention isn’t all you need for human-like language processing. You also need humongous datasets. AI researchers discovered this more or less by accident.

OpenAI’s GPT-2 model was trained on roughly 8 million pages of web text (40 gigabytes). That sounds like a lot. But the results weren’t earth-shattering. A reviewer described GPT-2’s output this way:

The prose is pretty rough, there’s the occasional non-sequitur, and the articles get less coherent the longer they get.

— “GPT-2”, Wikipedia (7/18/24)

The model that vaulted generative AI into the public consciousness in 2020, GPT-3, used the same basic architecture as GPT-2. But GPT-3 was trained on 45 terabytes of web text—over 1,000 times as much as GPT-2. At this scale, the GPT-3 model crossed a tipping point, beginning to produce language so human-like that it caught AI researchers by surprise.

Experts estimate that GPT-4’s dataset was approximately one petabyte (1,000 terabytes). GPT-4-class models have even more human-like capabilities, including understanding dialects, recognizing the emotional dimension of language, and solving complex problems by synthesizing information from multiple sources or subject matter domains.

Models tend to come in families of different sizes, ranging from tiny to large (with size being measured by the number of parameters). Small models are useful because they respond faster and require far fewer computing resources to run. Some can even be run on a phone. The largest models are often referred to as frontier models because they represent the state-of-the-art at the time of their release. But they are costly to build and deploy, as well as comparatively slow when generating predictions.

The size of a model is so important that parameter count has been a common way to estimate a model’s capability. But this is changing. Because of the time and cost involved in creating and deploying very large models, AI builders are finding ways to reduce the size of frontier models. Many current frontier models are smaller than their predecessors while maintaining, or even improving, their capability compared to the previous version.

Limitations of deep learning

The rapid development of AI has created a complicated landscape of new algorithms and techniques that are hard to characterize. Broadly speaking, the most common challenges of transformer-based deep learning include:

- Needing massive amounts of data.

- Often requiring extensive human feedback when fine-tuning models.

- Being computationally intensive, which has implications both for cost and for the natural resources such as energy and water needed for large data centers.

- Inability to edit models without time-consuming re-training.

- Susceptibility to social bias absorbed from web data as well as deliberate malicious use.

If this strikes you as a long and significant list of limitations compared to earlier AI technology, you’re not wrong. Creating systems that communicate in a way that feels organic to humans opens up a lot of technical and social challenges that less capable versions of AI never encountered because they simply weren’t good enough.

On the other hand, it’s also true that these challenges are active areas of research. Deep learning is improving rapidly. And, just as each new architecture in our Russian doll analogy was invented to address weaknesses in the previous one, we will eventually see new architectures emerge that address the challenges of deep learning (and undoubtedly introduce new ones).

Common use cases for deep learning

Generative AI is such a flexible technology that it’s impossible to list all of its possible use cases. New ones are being discovered by enterprising AI users all the time. However, here’s a partial list of ways generative AI can be used in the training industry.

This list should be thought of as a source of inspiration rather than a shopping list. Tasks that make sense for one organization may not make sense for another. And, just because AI has been used successfully for a particular task in one training organization does not mean it will be equally successful for a different training subject matter, audience, or organizational context. That’s why developing intuitions about AI through hands-on experimentation in your own training environment is so critical.

Key takeaways

AI is a collection of distinct architectures, each suited to particular use cases and having important trade-offs. Currently—and most likely for the foreseeable future—no AI architecture reasons the way humans do nor understands either the physical world or human context well enough to fully compete with direct human experience. Still, under the right circumstances, AI can significantly increase individual and team productivity as well as unlock innovations that improve learning outcomes and business results.

Investing time in learning how AI architectures work is worthwhile because it helps you understand when and how to use AI. For instance, once you understand that LLM technology is based on predicting tokens, it’s easier to see why large language models often stumble on exact counting and arithmetic, even simple tasks like generating a response with a specific word count or counting the number of “Rs” in “strawberry”.

Understanding this limitation can steer you toward workarounds. For instance, you can use word-based descriptions in your prompt to specify your desired level of detail rather than a word count. Or, you can choose a platform that combines language processing with tools that are capable of doing math, such as models that can write and execute simple programs when you ask for numerical calculations.
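The kind of simple program such a tool-equipped model might write and execute looks like the sketch below. The function names are hypothetical. Counting is trivial and perfectly reliable for ordinary code, because the code operates on individual characters and words rather than predicting tokens.

```python
def count_letter(word: str, letter: str) -> int:
    """Count occurrences of a letter, ignoring upper or lower case."""
    return word.lower().count(letter.lower())


def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


print(count_letter("strawberry", "R"))             # 3
print(word_count("AI is a collection of tools."))  # 6
```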

Ultimately, AI is simply a collection of tools. Like all tools, its value depends on it being the right tool for the job as well as your skill at using it. An electric jigsaw is a wonderful tool if you need to make detailed cuts to wood or metal. But no matter how proficient you are at using a jigsaw, it will always be a terrible tool if you need to tighten a bolt. Think of AI the same way.

More broadly, diving into details about how AI works can help you better assess its risks and rewards so you can make a thoughtful decision about when and how to embrace it. A fuzzy understanding of AI can cloud your assessment of AI as a technology. It might entice you to accept the view of enthusiasts who promote unrealistic AI hype. Or, it might incline you to side with AI detractors who believe AI is a useless fad that will soon pass. These are both bad takes. Maintaining a realistic, balanced view of both the promise and the limitations of AI is critical to making the most of it.